IRISformer: Dense Vision Transformers for Single-Image Inverse Rendering in Indoor Scenes

CVPR 2022 (Oral presentation)

- ¹UC San Diego

- ²Qualcomm AI Research

Overview

Indoor scenes exhibit significant appearance variations due to myriad interactions between arbitrarily diverse object shapes, spatially-changing materials, and complex lighting. Shadows, highlights, and inter-reflections caused by visible and invisible light sources require reasoning about long-range interactions for inverse rendering, which seeks to recover the components of image formation, namely, shape, material, and lighting.

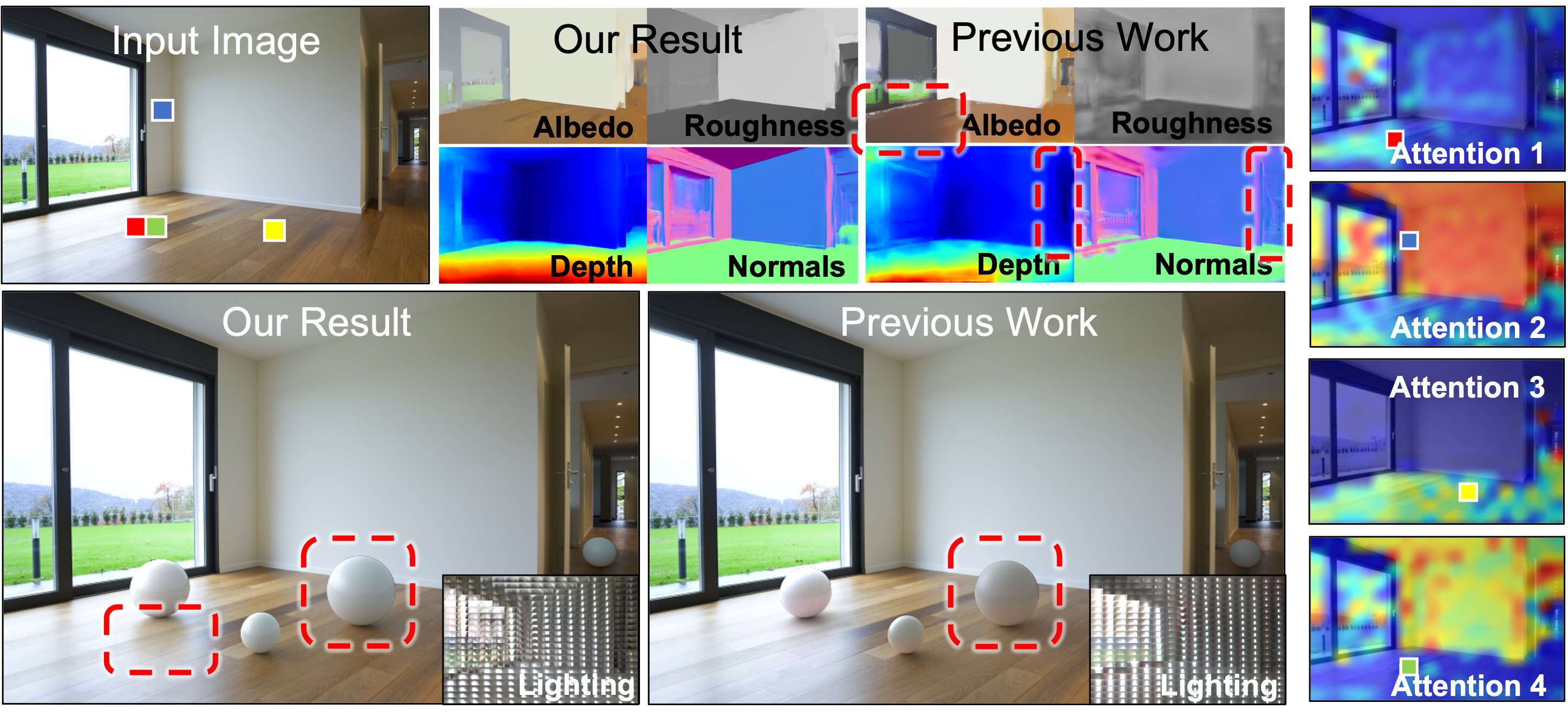

In this work, our intuition is that the long-range attention learned by transformer architectures is ideally suited to solving longstanding challenges in single-image inverse rendering. We demonstrate this with IRISformer, a specific instantiation of a dense vision transformer that excels at both the single-task and multi-task reasoning required for inverse rendering. Specifically, we propose a transformer architecture that simultaneously estimates depth, normals, spatially-varying albedo, roughness, and lighting from a single image of an indoor scene. Our extensive evaluations on benchmark datasets demonstrate state-of-the-art results on each of these tasks, enabling applications such as object insertion and material editing in a single unconstrained real image, with greater photorealism than prior work.
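The "long-range attention" argument rests on a basic property of self-attention: every output token is a weighted mix of *all* input tokens, so a patch can draw on evidence from anywhere in the image, such as a distant light source or shadow. The sketch below is a generic, single-head scaled dot-product self-attention in plain Python. It is not IRISformer's code. The token values, dimensions, and identity projection matrices are arbitrary assumptions chosen only to make the property visible.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of a (n x k) and b (k x m)."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax_rows(a: Matrix) -> Matrix:
    """Row-wise softmax, shifted by the row max for numerical stability."""
    out = []
    for row in a:
        m = max(row)
        exps = [math.exp(v - m) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product attention over all tokens in x.

    Returns (outputs, attention_weights). Row i of the weights gives how much
    token i draws from every token j; all weights are positive, so every
    token can influence every other token regardless of distance.
    """
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    scale = math.sqrt(len(wq[0]))
    scores = [[s / scale for s in row] for row in matmul(q, transpose(k))]
    weights = softmax_rows(scores)
    return matmul(weights, v), weights


if __name__ == "__main__":
    # Four hypothetical patch tokens of dimension 2, with identity projections.
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
    eye = [[1.0, 0.0], [0.0, 1.0]]
    out, w = self_attention(tokens, eye, eye, eye)
    # Editing only the last token still changes the first token's output.
    edited = [row[:] for row in tokens]
    edited[3] = [5.0, 5.0]
    out_edited, _ = self_attention(edited, eye, eye, eye)
    print(out[0], out_edited[0])
```

A convolutional layer, by contrast, only mixes a fixed local neighborhood per layer, so distant interactions must be propagated through many stacked layers.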

Highlights

- Inferring material (albedo and roughness), geometry (depth and normals), and spatially-varying lighting of the scene from a single real-world image.

- Two-stage model design with Transformer-based encoder-decoders:

  - multi-task setting: shared encoder-decoders, yielding a smaller model;

  - single-task setting: independent encoder-decoders per task, yielding better accuracy.

- Demonstrating the benefits of global attention for reasoning about long-range interactions.

- State-of-the-art results in:

  - per-pixel inverse rendering tasks on the OpenRooms dataset;

  - albedo estimation on the IIW dataset;

  - object insertion on natural image datasets.
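The size/accuracy trade-off between the multi-task and single-task settings comes down to how many encoder-decoders are instantiated. The toy parameter count below illustrates that bookkeeping under loudly hypothetical assumptions: the task list, the per-module and per-head sizes, and the idea of one lightweight output head per task are illustrative simplifications, not IRISformer's actual configuration.

```python
from typing import Dict, List

# Hypothetical task list for illustration; not IRISformer's actual module layout.
TASKS: List[str] = ["albedo", "roughness", "depth", "normals"]


def assign_modules(tasks: List[str], shared: bool) -> Dict[str, str]:
    """Map each task to the name of the encoder-decoder that serves it."""
    if shared:
        # Multi-task setting: every task reuses one encoder-decoder.
        return {task: "shared_encdec" for task in tasks}
    # Single-task setting: each task gets its own encoder-decoder.
    return {task: f"{task}_encdec" for task in tasks}


def total_params(assignment: Dict[str, str], module_params: int, head_params: int) -> int:
    """Count each distinct encoder-decoder once, plus one output head per task."""
    distinct_modules = len(set(assignment.values()))
    return distinct_modules * module_params + len(assignment) * head_params


if __name__ == "__main__":
    for shared in (True, False):
        plan = assign_modules(TASKS, shared)
        # 10M parameters per encoder-decoder and 50k per head are made-up sizes.
        label = "shared" if shared else "independent"
        print(label, total_params(plan, 10_000_000, 50_000))
```

Under these assumptions, sharing divides the encoder-decoder cost by roughly the number of tasks; the independent setting pays that cost in exchange for the better per-task accuracy noted in the highlights.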